Presubmit And Performance

September 18th, 2008 | Published in Google Testing

Posted by Marc Kaplan, Test Engineering Manager

When deciding whether to check in a new change, there are several testing-related questions to ask, including at least:

1. Does the new functionality work?

2. Did this change break existing functionality?

3. What is the performance impact of this change?

We can answer these questions either before we check things in or after. We found that answering them before check-in to the depot saves a lot of the time and effort otherwise spent doing detective work to track down bad changes. On most teams, developers kick off the tests before they check anything in, but in case somebody forgets, or doesn't run all of the relevant tests, we wanted a more automated system. So at Google we have what we call presubmit systems, which run all of the relevant tests automatically before the code is checked in, without the developer having to do anything. The idea is that once the code is written, there are minutes or hours before it's checked in while it's being reviewed via the Google code review process (more info on that at Guido's Tech Talk on Mondrian). We take advantage of this time: the presubmit system finds all of the tests that are relevant to the change, runs them, and reports the results.
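To make the "find the relevant tests" step concrete, here is a minimal sketch in Python. It is not how Google's presubmit system is implemented; it simply assumes a hypothetical mapping from each test to the source files it depends on, and selects every test that touches a changed file. The function name `relevant_tests`, the mapping `test_deps`, and the file names are all made up for illustration.

```python
def relevant_tests(changed_files, test_deps):
    """Return the tests whose dependencies include any changed file.

    changed_files: iterable of paths touched by the change.
    test_deps: dict mapping a test name to the paths it depends on
               (a hypothetical structure, assumed for this sketch).
    """
    changed = set(changed_files)
    # A test is relevant if it shares at least one file with the change.
    return sorted(
        test for test, deps in test_deps.items() if changed & set(deps)
    )


# Hypothetical dependency map for three tests.
test_deps = {
    "chunkserver_test": ["gfs/chunkserver.cc", "gfs/util.cc"],
    "master_test": ["gfs/master.cc", "gfs/util.cc"],
    "client_test": ["gfs/client.cc"],
}

selected = relevant_tests(["gfs/util.cc"], test_deps)
```

A real system would derive the dependency map from the build graph rather than maintain it by hand, but the selection step itself stays this simple.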

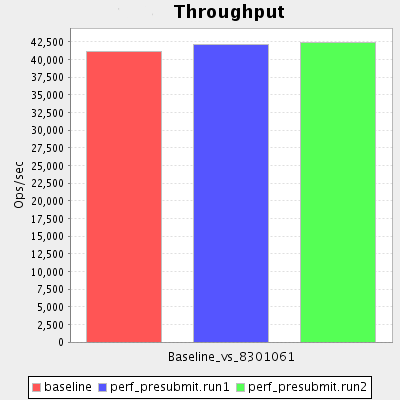

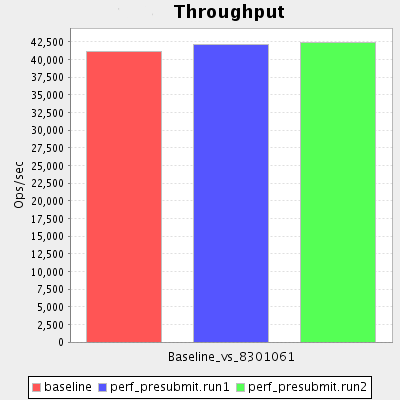

Most of the presubmit work at Google has focused on functionality (questions 1 and 2 above). However, on the GFS team, where we make a lot of performance-related changes, we wondered whether a performance presubmit system would be possible. We recently developed such a system and have begun using it. A hook was added to the code review process so that once a developer sends out a change for review, an automated performance test is started via the GFS Performance Testing Tool that we described in a previous Testing Blog post. Once two runs of the performance test have finished, they are compared against a known-good baseline that is created automatically at the beginning of each week, and a comparison graph is generated and e-mailed to the user submitting the CL. So now we know, before checking code in, whether it causes an unexpected hit to performance. Additionally, when developers want to make a change they think will improve performance, they don't need to do anything beyond a normal code review, which triggers an automatic performance test showing whether they got the performance bump they were hoping for.
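The comparison step might look roughly like the sketch below. This is an illustration under stated assumptions, not the GFS tool's actual logic: it assumes each run reports throughput-style metrics where higher is better, averages the runs, and flags any metric that falls more than a chosen tolerance below the baseline. The metric names, the 5% tolerance, and the function `compare_to_baseline` are all hypothetical.

```python
from statistics import mean


def compare_to_baseline(baseline, runs, tolerance=0.05):
    """Compare averaged run metrics against a known-good baseline.

    baseline: dict of metric name -> baseline value (higher is better).
    runs: list of dicts with the same metric names, one per test run.
    tolerance: fractional drop allowed before flagging a regression
               (an assumed threshold, not taken from the original post).
    """
    report = {}
    for metric, base_value in baseline.items():
        observed = mean(run[metric] for run in runs)
        change = (observed - base_value) / base_value
        report[metric] = {
            "baseline": base_value,
            "observed": observed,
            "change": change,
            "regressed": change < -tolerance,
        }
    return report


# Hypothetical weekly baseline and two presubmit runs (MB/s).
baseline = {"read_mb_s": 100.0, "write_mb_s": 80.0}
runs = [
    {"read_mb_s": 90.0, "write_mb_s": 82.0},
    {"read_mb_s": 88.0, "write_mb_s": 78.0},
]

report = compare_to_baseline(baseline, runs)
```

Averaging two runs is a cheap way to damp run-to-run noise; a production system would likely want more runs or a variance-aware comparison before blocking a change.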

Example of a performance presubmit report:

I've seen many companies and projects where performance testing is cumbersome and rarely run until close to the release date. As you might expect, this is very problematic: once you find a performance bug, you have to go back through all of the changes and try to narrow it down, and many times it's simply not that easy. Doing performance testing early and often helps narrow things down, but we've found that it's even better to use pre-checkin performance testing to make sure these regressions never creep into the code in the first place. Certainly you should still do a final performance test once the code is frozen, but with the presubmit performance testing system in place you are essentially certain that the performance will be as good as you expect it to be.
