Multi-Repository Development

May 15th, 2015 | Published in Google Testing

Author: Patrik Höglund

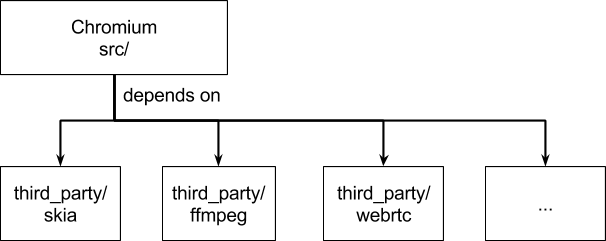

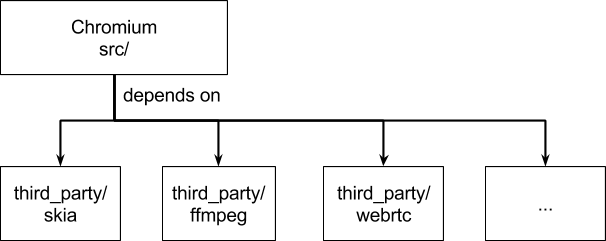

As we all know, software development is a complicated activity where we develop features and applications to provide value to our users. Furthermore, any nontrivial modern software is composed out of other software. For instance, the Chrome web browser pulls roughly a hundred libraries into its third_party folder when you build the browser. The most significant of these libraries is Blink, the rendering engine, but there’s also ffmpeg for image processing, skia for low-level 2D graphics, and WebRTC for real-time communication (to name a few).

Figure 1. Holy dependencies, Batman!

There are many reasons to use software libraries. Why write your own phone number parser when you can use libphonenumber, which is battle-tested by real use in Android and Chrome and available under a permissive license? Using such software frees you up to focus on the core of your software so you can deliver a unique experience to your users. On the other hand, you need to keep your application up to date with changes in the library (you want that latest bug fix, right?), and you also run a risk of such a change breaking your application. This article will examine that integration problem and how you can reduce the risks associated with it.

Updating Dependencies is Hard

The simplest solution is to check in a copy of the library, build with it, and avoid touching it as much as possible. This solution, however, can be problematic because you miss out on bug fixes and new features in the library. What if you need a new feature or bug fix that just made it in? You have a few options:

- Update the library to its latest release. If it’s been a long time since you did this, it can be quite risky and you may have to spend significant testing resources to ensure all the accumulated changes don’t break your application. You may have to catch up to interface changes in the library as well.

- Cherry-pick the feature/bug fix you want into your copy of the library. This is even riskier because your cherry-picked patches may depend on other changes in the library in subtle ways. Also, you still are not up to date with the latest version.

- Find some way to make do without the feature or bug fix.

One way to mitigate the update risk is to integrate more often with your dependencies. As an extreme example, let’s look at Chrome.

In Chrome development, there’s a massive amount of change going into its dependencies. The Blink rendering engine lives in a separate code repository from the browser. Blink sees hundreds of code changes per day, and Chrome must integrate with Blink often since it’s an important part of the browser. Another example is the WebRTC implementation, where a large part of Chrome’s implementation resides in the webrtc.org repository. This article will focus on the latter because it’s the team I happen to work on.

How “Rolling” Works

The open-sourced WebRTC codebase is used by Chrome but also by a number of other companies working on WebRTC. Chrome uses a toolchain called depot_tools to manage dependencies, and there’s a checked-in text file called DEPS where dependencies are managed. It looks roughly like this:

    {
      # ...
      'src/third_party/webrtc':
          'https://chromium.googlesource.com/' +
          'external/webrtc/trunk/webrtc.git' +
          '@' + '5727038f572c517204e1642b8bc69b25381c4e9f',
    }

The above means we should pull WebRTC from the specified git repository at the 572703... hash, similar to other dependency-provisioning frameworks. To build Chrome with a new version, we change the hash and check in a new version of the DEPS file. If the library’s API has changed, we must update Chrome to use the new API in the same patch. This process is known as rolling WebRTC to a new version.
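As a rough illustration of the mechanical part of a roll, the Python sketch below swaps the pinned hash in a DEPS-like string. The helper name `roll_pinned_hash` and the new hash are made up for this example; the real roll is done by editing and checking in the DEPS file, and this sketch does not touch any API updates Chrome might need in the same patch.

```python
import re

# DEPS-like text, copied from the excerpt above.
DEPS_TEXT = """{
  # ...
  'src/third_party/webrtc':
      'https://chromium.googlesource.com/' +
      'external/webrtc/trunk/webrtc.git' +
      '@' + '5727038f572c517204e1642b8bc69b25381c4e9f',
}
"""


def roll_pinned_hash(deps_text, old_hash, new_hash):
    """Return deps_text with old_hash replaced by new_hash (hypothetical helper)."""
    if not re.fullmatch(r"[0-9a-f]{40}", new_hash):
        raise ValueError("expected a full 40-character git hash")
    if deps_text.count(old_hash) != 1:
        raise ValueError("the old hash must appear exactly once")
    return deps_text.replace(old_hash, new_hash)


OLD = "5727038f572c517204e1642b8bc69b25381c4e9f"
NEW = "0123456789abcdef0123456789abcdef01234567"  # made-up hash, illustration only
rolled = roll_pinned_hash(DEPS_TEXT, OLD, NEW)
```

Everything else in the file stays the same, which is why a roll patch looks tiny even though it can change a lot of the code that goes into the build.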

Now the problem is that we have changed the code going into Chrome. Maybe getUserMedia has started crashing on Android, or maybe the browser no longer boots on Windows. We don’t know until we have built and run all the tests. Therefore a roll patch is subject to the same presubmit checks as any Chrome patch (i.e. many tests, on all platforms we ship on). However, roll patches can be considerably more painful and risky than other patches.

Figure 2. Life of a Roll Patch.

On the WebRTC team we found ourselves in an uncomfortable position a couple years back. Developers would make changes to the webrtc.org code and there was a fair amount of churn in the interface, which meant we would have to update Chrome to adapt to those changes. Also we frequently broke tests and WebRTC functionality in Chrome because semantic changes had unexpected consequences in Chrome. Since rolls were so risky and painful to make, they started to happen less often, which made things even worse. There could be two weeks between rolls, which meant Chrome was hit by a large number of changes in one patch.

Bots That Can See the Future: “FYI Bots”

We found a way to mitigate this which we called FYI (for your information) bots. A bot is Chrome lingo for a continuous build machine which builds Chrome and runs tests.

All the existing Chrome bots at that point would build Chrome as specified in the DEPS file, which meant they would build the WebRTC version we had rolled to up to that point. FYI bots replace that pinned version with WebRTC HEAD, but otherwise build and run Chrome-level tests as usual. Therefore:

- If all the FYI bots were green, we knew a roll most likely would go smoothly.

- If the bots didn’t compile, we knew we would have to adapt Chrome to an interface change in the next roll patch.

- If the bots were red, we knew we either had a bug in WebRTC or that Chrome would have to be adapted to some semantic change in WebRTC.
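The three cases above amount to a small lookup from bot state to what it tells us about the next roll. Here is a minimal Python sketch of that reading; the `BotResult` enum and `interpret_fyi_result` function are invented for illustration and are not part of any Chrome tooling.

```python
from enum import Enum


class BotResult(Enum):
    """Hypothetical states of an FYI bot building Chrome against WebRTC HEAD."""
    GREEN = "green"
    COMPILE_FAILURE = "compile failure"
    TEST_FAILURE = "red"


# What each state tells us about the next roll, following the list above.
INTERPRETATION = {
    BotResult.GREEN: "roll will most likely go smoothly",
    BotResult.COMPILE_FAILURE: "adapt Chrome to an interface change in the next roll patch",
    BotResult.TEST_FAILURE: "bug in WebRTC, or Chrome must adapt to a semantic change",
}


def interpret_fyi_result(result):
    """Return the roll prognosis for a single FYI bot result."""
    return INTERPRETATION[result]


prognosis = interpret_fyi_result(BotResult.COMPILE_FAILURE)
```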

Making Gradual Interface Changes

This solution helped but wasn’t quite satisfactory. We initially had the policy that it was fine to break the FYI bots since we could not update Chrome to use a new interface until the new interface had actually been rolled into Chrome. This, however, often caused the FYI bots to be compile-broken for days. We quickly started to suffer from red blindness [1] and had no idea if we would break tests on the roll, especially if an interface change was made early in the roll cycle.

The solution was to move to a more careful update policy for the WebRTC API. For the more technically inclined, “careful” here means “following the API prime directive” [2]. Consider this example:

    class WebRtcAmplifier {
      ...
      int SetOutputVolume(float volume);
    };

Normally we would just change the method’s signature when we needed to:

    class WebRtcAmplifier {
      ...
      int SetOutputVolume(float volume, bool allow_eleven);  // See the footnote at the end.
    };

… but this would compile-break Chrome until it could be updated. So we started doing it like this instead:

    class WebRtcAmplifier {
      ...
      int SetOutputVolume(float volume);
      int SetOutputVolume2(float volume, bool allow_eleven);
    };

Then we could:

- Roll into Chrome

- Make Chrome use SetOutputVolume2

- Update SetOutputVolume’s signature

- Roll again and make Chrome use SetOutputVolume

- Delete SetOutputVolume2
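To see why this ordering keeps everything building, here is a toy Python model of the steps above. It is only a sketch under simplifying assumptions: an API is reduced to a map from method name to argument count (so C++ overloading is ignored), and "compiles" just means every call Chrome makes matches that map. The names `compiles`, `always_green`, and the step tuples are invented for the example.

```python
def compiles(call_sites, api):
    """True if every (method, arg_count) that Chrome calls exists in the API."""
    return all(api.get(name) == argc for name, argc in call_sites)


# Toy API snapshots: method name -> number of arguments.
OLD = {"SetOutputVolume": 1}
BOTH = {"SetOutputVolume": 1, "SetOutputVolume2": 2}
UPDATED = {"SetOutputVolume": 2, "SetOutputVolume2": 2}
FINAL = {"SetOutputVolume": 2}

# Each step: (description, Chrome's calls, pinned WebRTC API, WebRTC HEAD API).
gradual_steps = [
    ("add SetOutputVolume2 in WebRTC", {("SetOutputVolume", 1)}, OLD, BOTH),
    ("roll into Chrome", {("SetOutputVolume", 1)}, BOTH, BOTH),
    ("make Chrome use SetOutputVolume2", {("SetOutputVolume2", 2)}, BOTH, BOTH),
    ("update SetOutputVolume's signature", {("SetOutputVolume2", 2)}, BOTH, UPDATED),
    ("roll again, Chrome uses SetOutputVolume", {("SetOutputVolume", 2)}, UPDATED, UPDATED),
    ("delete SetOutputVolume2", {("SetOutputVolume", 2)}, UPDATED, FINAL),
]

# Changing the signature in place: Chrome still calls the one-argument version.
naive_steps = [
    ("change signature in place", {("SetOutputVolume", 1)}, OLD, FINAL),
]


def always_green(steps):
    """True if Chrome builds against both the pinned version and HEAD at every step."""
    return all(
        compiles(calls, pinned) and compiles(calls, head)
        for _, calls, pinned, head in steps
    )


gradual_ok = always_green(gradual_steps)  # True: regular and FYI bots both build
naive_ok = always_green(naive_steps)      # False: the FYI bot (HEAD) cannot build
```

The point of the model is the invariant: at every step, Chrome’s calls are valid against both the pinned WebRTC and WebRTC HEAD, so neither the regular bots nor the FYI bots go compile-red.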

Results

When we implemented the above, we could fix problems as they came up rather than in big batches on each roll. We could institute the policy that the FYI bots should always be green, and that changes breaking them should be immediately rolled back. This made a huge difference. The team could work more smoothly and roll more often. This reduced our risk quite a bit, particularly when Chrome was about to cut a new version branch. Instead of doing panicked and risky rolls around a release, we could work out issues in good time and stay in control.

Another benefit of FYI bots is more granular performance tests. Before the FYI bots, it would frequently happen that a bunch of metrics regressed. However, it’s not fun to find which of the 100 patches in the roll caused the regression! With the FYI bots, we can see precisely which WebRTC revision caused the problem.
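As a sketch of what per-revision performance data buys you, the Python snippet below scans a series of measurements and reports the first revision that crosses a threshold. The revision names, metric values, baseline, and tolerance are all made up, and the function assumes a metric where higher means worse; real perf dashboards are considerably more careful about noise.

```python
def first_regression(samples, baseline, tolerance):
    """Return the first revision whose metric exceeds baseline by more than tolerance.

    samples is a list of (revision, value) pairs ordered oldest to newest.
    Assumes higher values are worse. Returns None if nothing regressed.
    """
    threshold = baseline * (1 + tolerance)
    for revision, value in samples:
        if value > threshold:
            return revision
    return None


# Made-up measurements, one per WebRTC revision built by an FYI bot.
samples = [("r101", 20.1), ("r102", 19.8), ("r103", 27.5), ("r104", 27.9)]
culprit = first_regression(samples, baseline=20.0, tolerance=0.1)
```

With one data point per roll instead of one per revision, the same scan could only tell you that the regression is somewhere among all the patches in that roll.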

Future Work: Optimistic Auto-rolling

The final step on this ladder (short of actually merging the repositories) is auto-rolling. The Blink team implemented this with their ARB (AutoRollBot). The bot wakes up periodically and tries to do a roll patch. If it fails on the trybots, it waits and tries again later (perhaps the trybots failed because of a flake or other temporary error, or perhaps the error was real but has been fixed).

To pull auto-rolling off, you are going to need very good tests. That goes for any roll patch (or any patch, really), but if you’re edging closer to a release and an unstoppable flood of code changes keeps breaking you, you’re not in a good place.
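The try, wait, and retry behavior described for the auto-roll bot can be sketched in a few lines of Python. This is not how ARB is implemented; `auto_roll`, its parameters, and the fake trybot outcomes are assumptions made for the example, with `try_roll` standing in for "create a roll patch and run it through the trybots".

```python
import time


def auto_roll(try_roll, max_attempts, wait_seconds, sleep=time.sleep):
    """Call try_roll until it succeeds or attempts run out.

    Returns the attempt number that succeeded, or None if every attempt failed.
    """
    for attempt in range(1, max_attempts + 1):
        if try_roll():
            return attempt
        if attempt < max_attempts:
            # The failure may be a flake, or a real error that gets fixed upstream.
            sleep(wait_seconds)
    return None


# Fake trybot outcomes: two failures, then a successful roll.
outcomes = iter([False, False, True])
succeeded_on = auto_roll(lambda: next(outcomes), max_attempts=5,
                         wait_seconds=0, sleep=lambda seconds: None)
```

The sketch makes the dependence on test quality visible: the loop trusts whatever `try_roll` reports, so weak tests mean bad rolls land automatically.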

References

[1] Martin Fowler (May 2006) “Continuous Integration”

[2] Dani Megert, Remy Chi Jian Suen, et al. (Oct 2014) “Evolving Java-based APIs”

Footnotes

- We actually did have a hilarious bug in WebRTC where it was possible to set the volume to 1.1, but only 0.0-1.0 was supposed to be allowed. No, really. Thus, our WebRTC implementation must be louder than the others since everybody knows 1.1 must be louder than 1.0.