The 2009 Semantic Robot Vision Challenge

January 5th, 2010 | Published in Google Open Source

The Semantic Robot Vision Challenge (SRVC) is a robot scavenger hunt competition that is designed to push the state of the art in image understanding and automatic acquisition of knowledge from large unstructured databases of images (such as those generally found on the web). In this competition, fully autonomous robots receive a text list of objects that they are to find. They use the web to automatically find image examples of those objects in order to learn visual models. These visual models are then used to identify the objects in the robot's cameras.

The latest SRVC was hosted at the International Symposium on Visual Computing (ISVC) in Las Vegas, Nevada, from Nov 30 to Dec 2, 2009. Five teams competed this year. Three of them brought robots and participated in both the robot and software leagues; the other two participated only in the software league.

The arena was set up with four chairs, three round tables, two tables with drawers, and a small set of stairs for displaying objects. All of the furniture had at least one object for the robots to discover on it, but not all of the objects in the environment were on the list of items for the robots to find.

The crowd was very interested in watching the different robots moving around the environment during their runs. Unfortunately, the robot teams themselves were plagued with various hardware and software integration troubles and only one team was able to find any objects. However, the robot teams that did not perform well demonstrated that their software was very capable of doing the work in a stand-alone mode. The visual classification results from the software league were very impressive.

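The official list of objects consisted of:

- pumpkin
- orange
- red ping pong paddle
- white soccer ball
- laptop
- dinosaur
- bottle
- toy car
- frying pan
- book "I am a Strange Loop" by Douglas Hofstadter
- book "Fugitive from the Cubicle Police"
- book "Photoshop in a Nutshell"
- CD "And Winter Came" by Enya
- CD "The Essential Collection" by Karl Jenkins and Adiemus
- DVD "Hitchhiker's Guide to the Galaxy" widescreen
- game "Call of Duty 4" box
- toy Domo-kun
- Lay's Classic Potato Chips
- Pepperidge Farm Goldfish Baked Snack Crackers
- Pepperidge Farm Milano Distinctive Cookies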

The US Naval Academy entered a robot based on an iRobot Create platform, which used a Hokuyo URG LIDAR for navigation and a mast-mounted camera for detecting the objects. This robot was by far the least expensive of the competitors but was still capable of carrying a laptop as well as the other hardware. Under this load the robot rapidly drained its batteries, but it was still able to capture a few images of objects and label them correctly.

Kansas State University entered with a robot based on a MobileRobots Inc. Pioneer 3 platform. They also had a Hokuyo URG LIDAR for navigation and a camera on a mast used for identifying the objects in the environment. This robot was able to traverse most of the environment successfully. Unfortunately, the robot was not able to aim its camera at enough objects to get a chance to correctly identify them.

The University of British Columbia (UBC) robot had by far the most complex setup of all of the robot competitors. The team used a MobileRobots Inc. Powerbot that carried four laptops, multiple cameras (including a monocular Canon PowerShot camera and a Point Grey Bumblebee2 stereo camera), and multiple LIDARs for both navigation and object extraction. The team demonstrated several impressive non-scored runs both before and after the event. However, during their officially scored run, the process that ran the primary object detection camera failed, so they were unable to identify any objects.

For more detailed descriptions of the robots, the software, and the computer vision techniques used by these teams, please refer to the team presentations. Each team's workshop presentation has been posted to that page. Links to their source code will also be posted.

As this contest continues to grow and evolve, the organizers are quite pleased by the progress of the computer vision research that is being demonstrated at these events. This was shown quite handily by the very high scores in the software-only league. However, the organizers would also like to remind the community that this is a robotics competition, and thus they want to see advances in active vision techniques, intelligent mapping and exploration, and reasoning about where objects are likely to be found (i.e. the "semantics" of the objects). In previous years, most of the robotics competitors took a random-walk approach to exploring the environment, hoping to cover all of the space and capture enough images to see the objects in question. This year, the organizers were quite pleased to see the reigning champions from the University of British Columbia take the robotic exploration aspect of the competition to the next level. The organizers would like to highlight some of the significant aspects of how the UBC team controlled their robot.

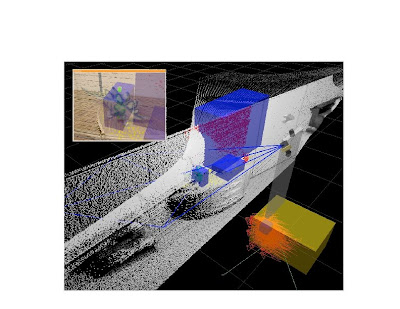

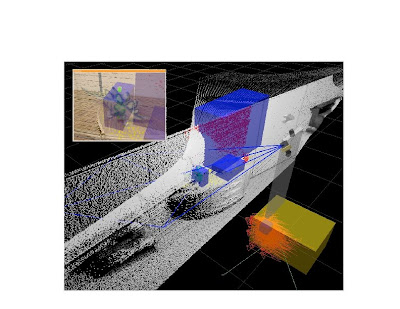

The UBC team approached the contest in two distinct phases: a mapping phase and an object identification phase. Their strategy this year was first to navigate the environment and map it using the SICK LIDAR and a SLAM (Simultaneous Localization and Mapping) algorithm. The robot would then revisit the obstacles in the room and scan them with the Hokuyo LIDAR. Flat horizontal surfaces were detected in the scans by finding a few consistent surface normals and then verifying the hypothesis of a planar surface. Regions that protrude from the plane were interpreted as objects, and 3D bounding boxes were computed from their convex hulls (see figure). This gave a set of candidate locations for the objects. The robot would then revisit these locations to take snapshots and run its object recognition algorithms on them. Three object recognition methods were implemented: SIFT matching, contour matching, and Deformable Parts Models (DPM). A fourth method, which used spherical harmonics to recognize 3D data, was turned off because it was not quite ready. The DPM approach was trained on the objects known in advance, but it could not be used for internet images because it was slightly too slow, even though it had been rewritten in C.
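To make the surface-then-object idea above concrete, here is a minimal Python sketch. It is not the UBC team's code, and it simplifies in ways the post does not describe: the supporting plane is guessed from a height histogram rather than from surface normals, clusters are found on a coarse grid, and each box is axis-aligned rather than derived from an explicit convex hull. The function names (`find_support_plane`, `segment_objects`) and all thresholds are assumptions chosen for illustration.

```python
from collections import Counter, deque


def find_support_plane(points, bin_size=0.02):
    """Return the height (z) of the most populated horizontal slice.

    Assumption: the supporting surface (e.g. a table top) contributes more
    points at a single height than anything else in the scan.
    """
    bins = Counter(round(z / bin_size) for _x, _y, z in points)
    best_bin, _count = bins.most_common(1)[0]
    return best_bin * bin_size


def segment_objects(points, plane_z, min_height=0.03, cell=0.05, min_points=3):
    """Group points that stick up above the plane and box each group.

    Returns a list of ((min_x, min_y, min_z), (max_x, max_y, max_z)) tuples.
    """
    # Keep only points that protrude clearly above the supporting plane.
    above = [p for p in points if p[2] - plane_z > min_height]

    # Bucket the remaining points into a coarse grid over x and y.
    cells = {}
    for p in above:
        key = (int(p[0] // cell), int(p[1] // cell))
        cells.setdefault(key, []).append(p)

    # Occupied cells that touch (8-neighborhood) belong to the same object.
    seen = set()
    boxes = []
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        cluster = []
        while queue:
            cx, cy = queue.popleft()
            cluster.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbor = (cx + dx, cy + dy)
                    if neighbor in cells and neighbor not in seen:
                        seen.add(neighbor)
                        queue.append(neighbor)
        if len(cluster) < min_points:
            continue  # too few points: treat as sensor noise
        xs, ys, zs = zip(*cluster)
        boxes.append(((min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))))
    return boxes
```

Each returned box is a candidate location that a robot could drive back to and photograph. A real system would also need to handle sloped or multiple surfaces and noisy range data, which this sketch ignores.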

The organizers were very impressed that the robot would first identify the specific locations where objects should be found, e.g. the tops of tables and chairs, and then go back and use the 3D sensors to explicitly segment out the objects at those locations. This is exactly the kind of active robotics vision research that we feel will help to push forward the state of the art in real-time computer vision on physical robots, and we hope to see more of this kind of approach from future competitors.

To sum up, the research being performed by the teams interested in this competition is extremely impressive. The teams are definitely rising to the challenge put forth by the organizers. Congratulations to all who participated!
