Seesaw: scalable and robust load balancing

January 29th, 2016 | by Open Source Programs Office | published in Google Open Source

Like all good projects, this one started out because we had an itch to scratch…

January 28th, 2016 | by Jane Smith | published in Google Apps

This week, we added 54 additional country-based holiday calendars to the Google Calendar Android and iOS apps. In total, you can now get 143 holiday calendars directly on your mobile calendar. To add or change the national holidays you see on your cale…

January 28th, 2016 | by Jane Smith | published in Google Apps

Getting started

Visit the Help Center to get the full instructions for web, Android, and iOS.

The newly supported languages are:

Arabic (ar), Chinese (Simplified) (zh-CN), Chinese (Traditional) (zh-TW), Dutch (nl), English (UK) (en-GB), French (fr), German (de), Italian (it), Japanese (ja), Korean (ko), Polish (pl), Portuguese (Brazil) (pt-BR), Russian (ru), Spanish (es), Spanish (Latin America) (es-419), Thai (th), Turkish (tr), Bulgarian (bg), Catalan (ca), Croatian (hr), Czech (cs), Danish (da), Farsi (fa), Filipino (fil), Finnish (fi), Greek (el), Hebrew (iw), Hindi (hi), Hungarian (hu), Indonesian (id), Latvian (lv), Lithuanian (lt), Norwegian (Bokmal) (no), Portuguese (Portugal) (pt-PT), Romanian (ro), Serbian (sr), Slovak (sk), Slovenian (sl), Swedish (sv), Ukrainian (uk), Vietnamese (vi)

Launch Details

Release track:

Launching to both Rapid release and Scheduled release

Rollout pace:

Full rollout (1-3 days for feature visibility)

Impact:

All end users

Action:

Change management suggested/FYI

More Information

Help Center

Note: all launches are applicable to all Google Apps editions unless otherwise noted

Launch release calendar

Launch detail categories

Get these product update alerts by email

Subscribe to the RSS feed of these updates

January 28th, 2016 | by Rob Newton | published in Google Adwords

Customers like The Honest Company, MuleSoft, and PMG use the AdWords app to easily manage their campaigns, stay in touch with the needs of their customers, and quickly access important business insights – from anywhere.

“Amidst the hectic holiday festivities, this app saved me from having to leave the dinner table to monitor performance and make quick changes to my accounts. That meant more time with my family. I’m excited for what’s to come!”

– Josh Franklin, Manager, Search Marketing, The Honest Company

“The app helps me access high level data on the go which can come in handy in the boardroom, or anytime I need to quickly understand how our campaigns are performing. Also, having the ability to make adjustments to our campaigns – such as changing bids and budget – is invaluable.”

– Nima Asrar Haghighi, Director, Digital Marketing & Analytics, MuleSoft

“The consumer shift to mobile means our retail clients’ campaigns have to be responsive to meet the needs of consumers at all times of the day. The app makes it easy for us to address issues without being chained to our laptops. PMG has been able to deliver prompt account adjustments from campaign to keyword level for our clients, as well as keep our customer satisfaction rates high.”

– Kyle Knox, Account Manager, PMG

Get started

You can learn more about the AdWords app in the AdWords Help Center.

Posted by Sugeeti Kochhar, Product Manager, AdWords

January 28th, 2016 | by Adam Singer | published in Google Analytics

January 28th, 2016 | by Sarah H | published in Google Student Blog

January 28th, 2016 | by Google Security PR | published in Google Online Security

Posted by Eduardo Vela Nava, Google Security

We launched our Vulnerability Reward Program in 2010 because rewarding security researchers for their hard work benefits everyone. These financial rewards help make our services, and the web as a whole, safer and more secure.

With an open approach, we’re able to consider a broad diversity of expertise for individual issues. We can also offer incentives for external researchers to work on challenging, time-consuming projects that otherwise may not receive proper attention.

Last January, we summarized these efforts in our first ever Security Reward Program ‘Year in Review’. Now, at the beginning of another new year, we wanted to look back at 2015 and again show our appreciation for researchers’ important contributions.

2015 at a Glance

Once again, researchers from around the world participated in our program, from Great Britain, Poland, Germany, Romania, Israel, Brazil, the United States, China, Russia and India, to name a few countries.

Here’s an overview of the rewards they received and of broader milestones for the program as a whole.

January 27th, 2016 | by Jane Smith | published in Google Apps, Google Docs

Check out the Help Center articles below for more information.

January 27th, 2016 | by Jane Smith | published in Google Apps, Google Docs

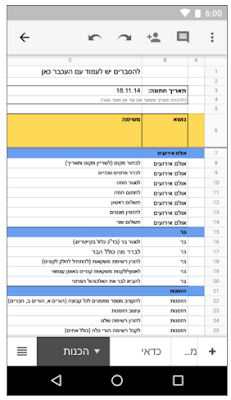

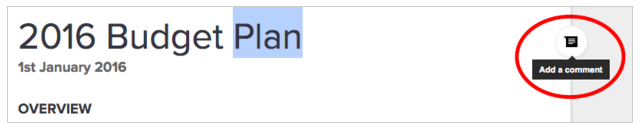

Try out these new features in Google Docs, Sheets, and Slides, and collaborate with fewer clicks!

Launch Details

Release track:

Mobile features – Launching to both Rapid release and Scheduled release

Web features – Launching to Rapid release, with Scheduled release coming on February 10th

*NOTE: Comments in the Google Sheets Android app launched on November 12th, 2015.

Rollout pace:

Full rollout (1–3 days for feature visibility)

Impact:

All end users

Action:

Change management suggested/FYI

More Information

Help Center: Comment in Docs, Sheets, & Slides

Google for Work Blog

January 27th, 2016 | by A Googler | published in Google Docs

When Jim, one of the engineers on the Google Slides team, brought a zucchini chocolate cake into the office last week, we knew we had to get the recipe.

So we asked him and his wife, Alison, to let us in on the family secret—just in time for Chocolate Cake Day. They worked together in Slides (mobile commenting across Google Docs just launched today!) to perfect the recipe. Alison writes:

Growing up, my grandma made zucchini chocolate cake often, especially when there was a surplus of zucchinis at the local farmer’s market. The cake is ridiculously moist and pairs well with many different frostings, though cream cheese is my favorite.

Thanks to mobile commenting, Jim and I went back and forth on the recipe—Jim on his Nexus 9, me on my iPhone—until we had it just right:

Check out our family recipe in Slides. We call it Straka’s Zucchini Chocolate Cake—in honor of my grandma.

Happy Chocolate Cake Day, from our family to yours.

Posted by Alison Zoll, chemist, baker and wife of Jim Zoll, Slides engineer

Get the apps on Android and iOS (Docs, Sheets, Slides)

January 27th, 2016 | by Google Blogs | published in Google Blog

The game of Go originated in China more than 2,500 years ago. Confucius wrote about the game, and it is considered one of the four essential arts required of any true Chinese scholar. Go is played by more than 40 million people worldwide, and its rules are simple: players take turns to place black or white stones on a board, trying to capture the opponent’s stones or surround empty space to make points of territory. The game is played primarily through intuition and feel, and because of its beauty, subtlety and intellectual depth it has captured the human imagination for centuries.

But as simple as the rules are, Go is a game of profound complexity. There are 1,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000 possible positions—that’s more than the number of atoms in the universe, and more than a googol times larger than chess.

This complexity is what makes Go hard for computers to play, and therefore an irresistible challenge to artificial intelligence (AI) researchers, who use games as a testing ground to invent smart, flexible algorithms that can tackle problems, sometimes in ways similar to humans. The first game mastered by a computer was noughts and crosses (also known as tic-tac-toe) in 1952. Then fell checkers in 1994. In 1997 Deep Blue famously beat Garry Kasparov at chess. The progress isn’t limited to board games, either: IBM’s Watson bested two champions at Jeopardy in 2011, and in 2014 our own algorithms learned to play dozens of Atari games just from the raw pixel inputs. But to date, Go has thwarted AI researchers; computers still play Go only as well as amateurs.

Traditional AI methods—which construct a search tree over all possible positions—don’t have a chance in Go. So when we set out to crack Go, we took a different approach. We built a system, AlphaGo, that combines an advanced tree search with deep neural networks. These neural networks take a description of the Go board as an input and process it through 12 different network layers containing millions of neuron-like connections. One neural network, the “policy network,” selects the next move to play. The other neural network, the “value network,” predicts the winner of the game.

We trained the policy network on 30 million moves from games played by human experts, until it could predict the human move 57 percent of the time (the previous record before AlphaGo was 44 percent). But our goal is to beat the best human players, not just mimic them. To do this, AlphaGo learned to discover new strategies for itself by playing thousands of games between its neural networks and adjusting the connections using a trial-and-error process known as reinforcement learning. Of course, all of this requires a huge amount of computing power, so we made extensive use of Google Cloud Platform.

After all that training it was time to put AlphaGo to the test. First, we held a tournament between AlphaGo and the other top programs at the forefront of computer Go. AlphaGo won all but one of its 500 games against these programs. So the next step was to invite the reigning three-time European Go champion Fan Hui, an elite professional player who has devoted his life to Go since the age of 12, to our London office for a challenge match. In a closed-door match last October, AlphaGo won by 5 games to 0. It was the first time a computer program had ever beaten a professional Go player. You can find out more in our paper, which was published in Nature today.

What’s next? In March, AlphaGo will face its ultimate challenge: a five-game challenge match in Seoul against the legendary Lee Sedol—the top Go player in the world over the past decade.

We are thrilled to have mastered Go and thus achieved one of the grand challenges of AI. However, the most significant aspect of all this for us is that AlphaGo isn’t just an “expert” system built with hand-crafted rules; instead it uses general machine learning techniques to figure out for itself how to win at Go. While games are the perfect platform for developing and testing AI algorithms quickly and efficiently, ultimately we want to apply these techniques to important real-world problems. Because the methods we’ve used are general-purpose, our hope is that one day they could be extended to help us address some of society’s toughest and most pressing problems, from climate modelling to complex disease analysis. We’re excited to see what we can use this technology to tackle next!

Posted by Demis Hassabis, Google DeepMind

January 27th, 2016 | by Research Blog | published in Google Research

Posted by David Silver and Demis Hassabis, Google DeepMind

Games are a great testing ground for developing smarter, more flexible algorithms that have the ability to tackle problems in ways similar to humans. Creating programs that are able to play games better than the best humans has a long history – the first classic game mastered by a computer was noughts and crosses (also known as tic-tac-toe) in 1952 as a PhD candidate’s project. Then fell checkers in 1994. Chess was tackled by Deep Blue in 1997. The success isn’t limited to board games, either – IBM’s Watson won first place on Jeopardy in 2011, and in 2014 our own algorithms learned to play dozens of Atari games just from the raw pixel inputs.

But one game has thwarted A.I. research thus far: the ancient game of Go. Invented in China over 2500 years ago, Go is played by more than 40 million people worldwide. The rules are simple: players take turns to place black or white stones on a board, trying to capture the opponent’s stones or surround empty space to make points of territory. Confucius wrote about the game, and its aesthetic beauty elevated it to one of the four essential arts required of any true Chinese scholar. The game is played primarily through intuition and feel, and because of its subtlety and intellectual depth it has captured the human imagination for centuries.

But as simple as the rules are, Go is a game of profound complexity. The search space in Go is vast: more than a googol times larger than that of chess, with more possible positions than there are atoms in the universe. As a result, traditional “brute force” AI methods, which construct a search tree over all possible sequences of moves, don’t have a chance in Go. To date, computers have played Go only as well as amateurs. Experts predicted it would be at least another 10 years until a computer could beat one of the world’s elite group of Go professionals.

We saw this as an irresistible challenge! We started building a system, AlphaGo, described in a paper in Nature this week, that would overcome these barriers. The key to AlphaGo is reducing the enormous search space to something more manageable. To do this, it combines a state-of-the-art tree search with two deep neural networks, each of which contains many layers with millions of neuron-like connections. One neural network, the “policy network”, predicts the next move, and is used to narrow the search to consider only the moves most likely to lead to a win. The other neural network, the “value network”, is then used to reduce the depth of the search tree — estimating the winner in each position in place of searching all the way to the end of the game.
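The idea of narrowing the search (breadth) and cutting it short (depth) can be illustrated with a minimal Python sketch. This is not AlphaGo's code, and every name in it is hypothetical: the game is a toy one-pile take-away game (remove 1 to 3 stones; whoever takes the last stone wins), `policy` and `value` are hand-written heuristics standing in for trained networks, and for simplicity the search is a plain depth-limited negamax rather than Monte-Carlo tree search. Only the `top_k` moves with the highest prior are expanded, and once `depth` runs out the value estimate replaces further search.

```python
from typing import Dict, List

# Hypothetical toy game: one pile of stones; a move removes 1-3 stones and
# whoever takes the last stone wins. Piles that are a multiple of 4 are
# lost for the player to move.


def legal_moves(stones: int) -> List[int]:
    return [k for k in (1, 2, 3) if k <= stones]


def policy(stones: int) -> Dict[int, float]:
    """Stand-in for a policy network: a prior probability for each legal move."""
    moves = legal_moves(stones)
    # Favor moves that leave the opponent a multiple of 4 stones.
    weights = {m: 3.0 if (stones - m) % 4 == 0 else 1.0 for m in moves}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


def value(stones: int) -> float:
    """Stand-in for a value network: +1 if the player to move should win, else -1."""
    return 1.0 if stones % 4 != 0 else -1.0


def top_moves(stones: int, top_k: int) -> List[int]:
    # Breadth reduction: keep only the top_k moves by prior probability.
    priors = policy(stones)
    return sorted(priors, key=priors.get, reverse=True)[:top_k]


def search(stones: int, depth: int, top_k: int = 2) -> float:
    """Depth-limited negamax score from the viewpoint of the player to move."""
    if stones == 0:
        return -1.0  # the opponent just took the last stone
    if depth == 0:
        return value(stones)  # depth reduction: trust the value estimate
    return max(-search(stones - m, depth - 1, top_k) for m in top_moves(stones, top_k))


def best_move(stones: int, depth: int = 3, top_k: int = 2) -> int:
    return max(top_moves(stones, top_k),
               key=lambda m: -search(stones - m, depth - 1, top_k))


print(best_move(5))  # 1, leaving the opponent 4 stones
print(best_move(7))  # 3
```

Because the toy `value` function happens to be exact for this game, the depth cutoff never misleads the search; a learned value network would only approximate it, which is why its accuracy matters so much.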

AlphaGo’s search algorithm is much more human-like than previous approaches. For example, when Deep Blue played chess, it searched by brute force over thousands of times more positions than AlphaGo. Instead, AlphaGo looks ahead by playing out the remainder of the game in its imagination, many times over – a technique known as Monte-Carlo tree search. But unlike previous Monte-Carlo programs, AlphaGo uses deep neural networks to guide its search. During each simulated game, the policy network suggests intelligent moves to play, while the value network astutely evaluates the position that is reached. Finally, AlphaGo chooses the move that is most successful in simulation.
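A simplified Python sketch of network-guided Monte-Carlo tree search follows, under stated assumptions: the game is a hypothetical toy (one pile of stones, remove 1 to 3, taking the last stone wins), `policy` and `value` are hand-written stand-ins for the networks, and the selection rule is a PUCT-style formula with an arbitrary constant `c_puct`. Each simulation walks down the tree, expands a new leaf with the policy's priors, scores that leaf with the value function instead of playing the game out, and backs the result up the path; the move visited most often is played. The real system differs in many details.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy game (one pile, remove 1-3 stones, taking the last stone
# wins) with hand-written stand-ins for the policy and value networks.


def legal_moves(stones: int) -> List[int]:
    return [k for k in (1, 2, 3) if k <= stones]


def policy(stones: int) -> Dict[int, float]:
    moves = legal_moves(stones)
    weights = {m: 3.0 if (stones - m) % 4 == 0 else 1.0 for m in moves}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}


def value(stones: int) -> float:
    return 1.0 if stones % 4 != 0 else -1.0


class Node:
    def __init__(self, prior: float) -> None:
        self.prior = prior
        self.visits = 0
        # Sum of results from the viewpoint of the player who moved INTO this node.
        self.value_sum = 0.0
        self.children: Dict[int, "Node"] = {}

    def q(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0


def select(node: Node, c_puct: float = 1.5) -> Tuple[int, Node]:
    # PUCT-style score: average result Q plus an exploration bonus that is
    # large for moves with a high prior and few visits.
    scale = math.sqrt(node.visits)
    return max(node.children.items(),
               key=lambda kv: kv[1].q() + c_puct * kv[1].prior * scale / (1 + kv[1].visits))


def simulate(node: Node, stones: int) -> float:
    """Run one simulation; return the result for the player to move at `stones`."""
    if stones == 0:
        result = -1.0  # the opponent just took the last stone
    elif not node.children:
        # Expand with policy priors and evaluate the leaf with the value function.
        for move, prior in policy(stones).items():
            node.children[move] = Node(prior)
        result = value(stones)
    else:
        move, child = select(node)
        result = -simulate(child, stones - move)  # flip sign for the opponent
    node.visits += 1
    node.value_sum -= result  # store from the viewpoint of the player who moved here
    return result


def choose_move(stones: int, simulations: int = 200) -> int:
    root = Node(prior=1.0)
    for _ in range(simulations):
        simulate(root, stones)
    # Play the move that was explored most often.
    return max(root.children.items(), key=lambda kv: kv[1].visits)[0]


print(choose_move(5))  # 1
print(choose_move(7))  # 3
```

Storing each node's results from the viewpoint of the player who moved into it lets the selection step simply maximize Q, while each level of recursion flips the sign for the opponent.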

We first trained the policy network on 30 million moves from games played by human experts, until it could predict the human move 57% of the time (the previous record before AlphaGo was 44%). But our goal is to beat the best human players, not just mimic them. To do this, AlphaGo learned to discover new strategies for itself, by playing thousands of games between its neural networks, and gradually improving them using a trial-and-error process known as reinforcement learning. This approach led to much better policy networks, so strong in fact that the raw neural network (immediately, without any tree search at all) can defeat state-of-the-art Go programs that build enormous search trees.

These policy networks were in turn used to train the value networks, again by reinforcement learning from games of self-play. These value networks can evaluate any Go position and estimate the eventual winner – a problem so hard it was believed to be impossible.

Of course, all of this requires a huge amount of compute power, so we made extensive use of Google Cloud Platform, which enables researchers working on AI and Machine Learning to access elastic compute, storage and networking capacity on demand. In addition, new open source libraries for numerical computation using data flow graphs, such as TensorFlow, allow researchers to efficiently deploy the computation needed for deep learning algorithms across multiple CPUs or GPUs.

So how strong is AlphaGo? To answer this question, we played a tournament between AlphaGo and the best of the rest – the top Go programs at the forefront of A.I. research. Using a single machine, AlphaGo won all but one of its 500 games against these programs. In fact, AlphaGo even beat those programs after giving them four free moves as a head start at the beginning of each game. A high-performance version of AlphaGo, distributed across many machines, was even stronger.

This figure from the Nature article shows the Elo rating and approximate rank of AlphaGo (both single-machine and distributed versions), the European champion Fan Hui (a professional 2-dan), and the strongest other Go programs, evaluated over thousands of games. Pale pink bars show the performance of other programs when given a four-move head start.
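The figure reports Elo ratings. For readers unfamiliar with the scale, the standard Elo model maps a rating difference to an expected score; a minimal Python sketch of that formula follows. The function name is ours, and the post does not describe how the figure's ratings were fitted, so treat this as background on the scale rather than a reconstruction of the paper's method.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of player A against player B under the standard Elo model.

    A win counts as 1, a draw as 0.5 and a loss as 0, so for games that
    cannot be drawn this is A's estimated probability of winning.
    """
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / 400.0))


print(round(elo_expected_score(1900, 1500), 3))  # 0.909
print(elo_expected_score(1500, 1500))  # 0.5
```

Under this model, a 400-point rating advantage corresponds to an expected score of about 0.91, and equal ratings give 0.5.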

It seemed that AlphaGo was ready for a greater challenge. So we invited the reigning 3-time European Go champion Fan Hui — an elite professional player who has devoted his life to Go since the age of 12 — to our London office for a challenge match. The match was played behind closed doors between October 5-9 last year. AlphaGo won by 5 games to 0 — the first time a computer program has ever beaten a professional Go player.

AlphaGo’s next challenge will be to play the top Go player in the world over the last decade, Lee Sedol. The match will take place this March in Seoul, South Korea. Lee Sedol is excited to take on the challenge saying, “I am privileged to be the one to play, but I am confident that I can win.” It should prove to be a fascinating contest!

We are thrilled to have mastered Go and thus achieved one of the grand challenges of AI. However, the most significant aspect of all this for us is that AlphaGo isn’t just an ‘expert’ system built with hand-crafted rules, but instead uses general machine learning techniques to allow it to improve itself, just by watching and playing games. While games are the perfect platform for developing and testing AI algorithms quickly and efficiently, ultimately we want to apply these techniques to important real-world problems. Because the methods we have used are general purpose, our hope is that one day they could be extended to help us address some of society’s toughest and most pressing problems, from climate modelling to complex disease analysis.

January 27th, 2016 | by Reto Meier | published in Google Android

Posted by Lily Sheringham, Google Play team

Headquartered in Singapore, Wego is a popular online travel marketplace for flights and hotels, serving users in Southeast Asia and the Middle East. They launched their Android app in early 2014, and today more than 62 percent of Wego app users are on Android. Wego recently redesigned their app using material design principles to give their users a more native Android experience, with greater consistency and easier navigation.

Watch Ross Veitch, co-founder and CEO, and the Wego team talk about how they increased monthly user retention by 300 percent and reduced uninstall rates by up to 25 percent with material design.

Learn more about material design, how to use Android Studio, and how to find success on Google Play with the new guide ‘Secrets to App Success on Google Play.’

January 27th, 2016 | by Chrome Blog | published in Google Chrome

Chrome gives you a fast and secure way to explore the web, no matter what device you’re using. To keep all of our users safe and to help them save on data usage, we now show 5 million Safe Browsing warning messages every day and have over 100 million people using Data Saver mode in Chrome on Android. This saves up to 100 terabytes of data a day, enough data to store the complete works of Shakespeare 10 million times!

The latest version of Chrome brings some fresh updates for the new year to get you moving faster and stay secure.

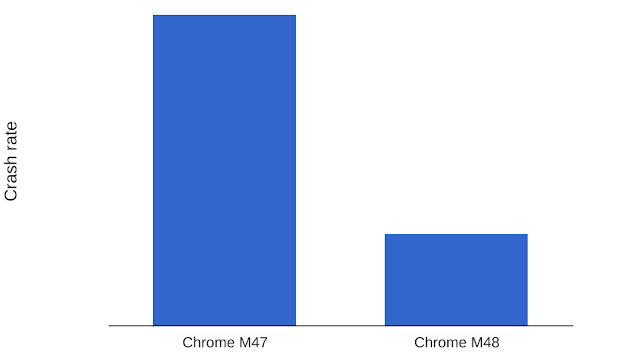

The latest Chrome for iOS is significantly faster and more stable, so you can pick up where you left off browsing (on any device) without worrying about Chrome crashing on misbehaving webpages. In fact, tests show that the latest version reduces Chrome’s crash rate by 70 percent and speeds up JavaScript execution significantly.

These improvements will gradually be rolled out starting today—just update Chrome to get rolling. (If you want a peek under the hood, check out this update in the Chromium blog.)

January 27th, 2016 | by Google Blogs | published in Google Blog

A year and a half ago we introduced Google Cardboard, a simple cardboard viewer that anyone can use to experience mobile virtual reality (VR). With just Cardboard and the smartphone in your pocket, you can travel to faraway places and visit imagined worlds. Since then everyone from droid lovers and Sunday edition subscribers, to big kids and grandmas have been able to enjoy VR—often for the very first time. Here’s a look at where we are, 19 months in:

1. 5 million Cardboard fans have joined the fold.

2. In just the past two months (October-December), you launched into 10 million more immersive app experiences:

3. Out of 1,000+ Cardboard apps on Google Play, one of your favorites got you screaming “aaaaaaahwsome,” while another “gave you goosebumps.”

4. You teleported to places far and wide, right from the comfort of YouTube.

5. Since we launched Cardboard Camera in December, you’ve captured more than 750,000 VR photos, letting you relive your favorite moments anytime, from anywhere.

6. Students around the world have taken VR field trips to the White House, the Republic of Congo, and 150 other places around the globe with Expeditions.

While you’ve been traveling the world and beyond with Cardboard, we’ve been on a journey, too. Keep your eyes peeled for more projects that bring creative, entertaining and educational experiences to mobile VR.

Posted by Clay Bavor, VP Virtual Reality